Crawl Budget in SEO: How to Find and Fix Issues

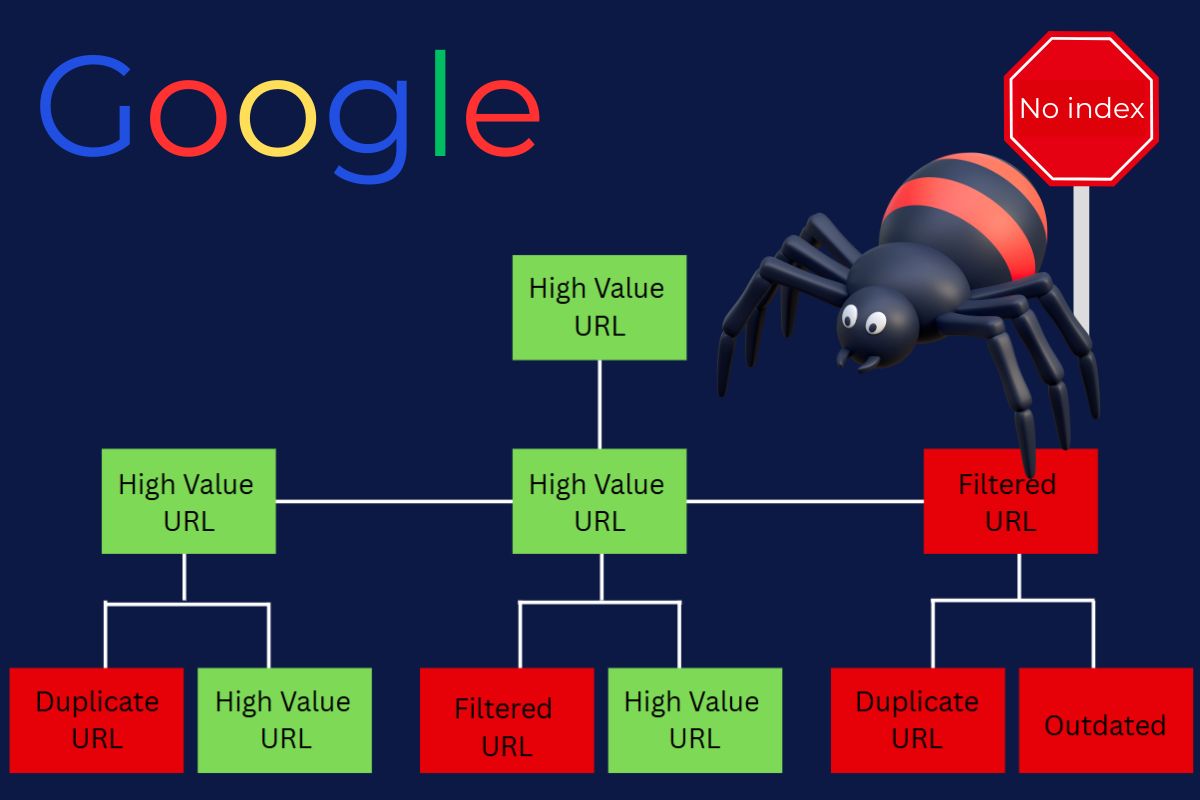

If pages aren’t getting indexed, the issue might not be content quality—it could be a problem with crawl budget. Search engines like Google allocate a limited number of pages they’ll crawl from a site within a given timeframe. When that crawl budget isn’t used efficiently, important pages get missed, rankings suffer, and traffic slows down.

What Is Crawl Budget in SEO and Why Does It Matter

The SEO crawl budget is the number of URLs Googlebot is willing and able to crawl on your site. It’s not unlimited. For small sites, it’s rarely a concern. But as a store grows, the number of crawlable URLs can far exceed the number of products. This is especially true with dynamic pages, faceted navigation, or lots of thin content. At that point, crawl budget can quietly become a real bottleneck.

So, what is crawl budget in SEO exactly? It’s made up of two main parts:

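- Crawl rate limit: How much Googlebot can crawl without overloading the server. This covers how many parallel connections it opens and how long it waits between fetches. Google’s current documentation calls this the crawl capacity limit.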

- Crawl demand: How much Google wants to crawl based on page popularity and freshness.

If you’re running an e-commerce store with thousands of SKUs (stock keeping units), variants, blog pages, and outdated URLs, the URLs competing for Googlebot’s attention can quickly outgrow two limits: what your server can handle and how much Google wants to crawl. That’s when crawl budget problems hit hard. The worst part? You usually don’t notice until rankings drop, or until new pages never show up in search at all.

How to Tell If Crawl Budget Is Holding You Back

Crawl budget issues aren’t always obvious. But there are clear signs when search engines are struggling to index your site efficiently. Knowing how to check crawl budget is the first step.

Signals You Might Have a Crawl Budget Problem

- Pages aren’t getting indexed even after weeks of being live.

- Google Search Console (GSC) shows a large number of URLs marked “Discovered – currently not indexed” in the Page indexing report.

- Server logs reveal Googlebot hitting irrelevant pages like filters, tags, or old promo URLs.

- Crawl stats in GSC show fluctuations or sudden drops in crawl activity.

- Important pages are ranking inconsistently or not at all.

These patterns often emerge on large e-commerce sites with thousands of URLs, especially when faceted navigation, auto-generated pages, or outdated product listings overload the index.

Where to Check Crawl Data

To improve crawl budget, data has to guide the process. Here’s where to dig in:

- Google Search Console: the Crawl stats report (under Settings), the Page indexing report (formerly the Coverage report), and the URL Inspection tool.

- Server logs: These show exactly which pages Googlebot hits and how often.

- Third-party tools like Screaming Frog or Sitebulb can simulate crawls and highlight issues.

Tracking this data helps pinpoint waste, like low-value pages eating up resources, and shows whether fixes are making an impact.
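Server logs are the most direct source for spotting this waste. The Python below is a minimal sketch that assumes your server writes the common “combined” log format used by Apache and Nginx. It counts Googlebot requests per path and reports the share that went to parameterized URLs (filters, sorting, tracking). It only checks the user-agent string, which can be spoofed. Google documents a reverse DNS lookup for verifying that a request really came from Googlebot.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# Captures the request target and user agent from a "combined" access-log line.
LOG_PATTERN = re.compile(
    r'"[A-Z]+ (?P<target>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines):
    """Count Googlebot requests per path and the share that hit parameterized URLs."""
    per_path = Counter()
    parameterized = 0
    for line in lines:
        match = LOG_PATTERN.search(line)
        # The user-agent string alone can be spoofed; this sketch does not verify it.
        if not match or "Googlebot" not in match.group("agent"):
            continue
        target = urlsplit(match.group("target"))
        per_path[target.path] += 1
        if target.query:
            parameterized += 1
    total = sum(per_path.values())
    return per_path, (parameterized / total if total else 0.0)
```

For example, `with open("access.log") as log: per_path, share = googlebot_hits(log)` (the file name is a placeholder). Then check `per_path.most_common(20)`. This quickly shows whether cart, search, or filter URLs sit near the top.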


What Affects Crawl Budget (And What to Fix First)

Understanding the root of the problem is what unlocks real results. If the goal is to get more out of your crawl budget, start with the factors that are actively wasting it.

The 3 Factors That Influence Your Crawl Budget

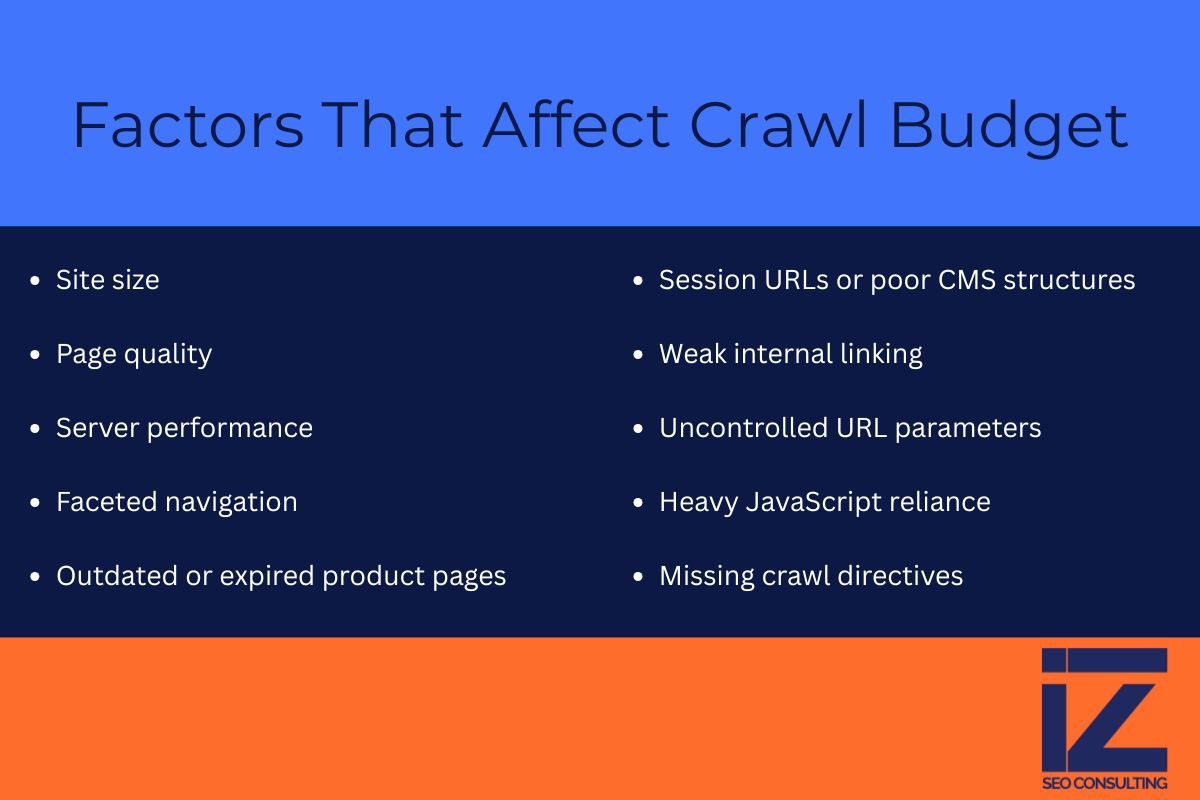

According to Google, the 3 factors that influence your crawl budget the most are:

- Site size – The larger the site, the more URLs to crawl. Sites with tens of thousands of pages (think product variants, collections, tag pages) burn through crawl budget fast.

- Page quality – Google deprioritizes low-value pages. Too many thin or duplicate pages (like out-of-stock products or boilerplate content) can drag the whole site down.

- Server performance – Slow or unstable hosting makes Googlebot back off. If the server responds slowly, times out, or returns server errors, expect crawling to slow down quickly.
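Server health shows up in the same access logs. Google’s documentation says Googlebot slows down when it receives server errors (5xx) or 429 “Too Many Requests” responses. That makes the share of Googlebot requests answered this way a useful number to watch. Below is a minimal Python sketch. It again assumes the “combined” log format and matches Googlebot by user-agent string only.

```python
import re
from collections import Counter

# Captures the status code and user agent from a "combined" access-log line.
STATUS_PATTERN = re.compile(r'" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"')

def googlebot_error_rate(lines):
    """Share of Googlebot requests answered with 5xx or 429, plus counts per status."""
    statuses = Counter()
    for line in lines:
        match = STATUS_PATTERN.search(line)
        if match and "Googlebot" in match.group("agent"):
            statuses[int(match.group("status"))] += 1
    total = sum(statuses.values())
    errors = sum(n for code, n in statuses.items() if code >= 500 or code == 429)
    return (errors / total if total else 0.0), statuses
```

What counts as “too high” is a judgment call. A rate that climbs during sales or deploys is a hint to look at hosting capacity.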

But beyond Google’s list, e-commerce brings its own challenges.

Hidden Crawl Budget Drains in E-Commerce

Dropshipping platforms and catalog-based stores often generate pages automatically. This is where things go wrong:

- Faceted navigation: Filtering by color, size, price, etc., can explode the number of crawlable URLs with no real content difference.

- Outdated product pages: If you don’t clean them up, they keep getting crawled even when products are gone.

- Session URLs and duplicate content: Poor CMS setups or third-party apps can append session IDs to URLs or publish the same page under several addresses.
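Here is how fast faceted navigation multiplies URLs. Assume a single category page with four single-select filters. The filter names and value counts below are made up for illustration. Each filter is either off or set to one value, so the counts multiply.

```python
from math import prod  # Python 3.8+

def facet_url_count(facet_sizes):
    """URLs one category page can spawn from single-select filters.

    Each facet is either unset or set to one of its n values, so it
    contributes n + 1 options; the unfiltered page is included in the total.
    """
    return prod(n + 1 for n in facet_sizes.values())

# Hypothetical filters for one category page.
shirt_filters = {"color": 5, "size": 6, "price_range": 4, "sort": 3}
per_category = facet_url_count(shirt_filters)
print(per_category)       # 840
print(per_category * 50)  # 42000 across 50 similar categories
```

Multi-select filters, or parameters that can appear in any order, push the total higher still. None of this requires adding a single new product.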

Extra Crawl Budget Issues to Be Aware Of

In addition to the main issues, these technical problems can also reduce your SEO crawl budget over time:

- Weak internal linking: If valuable pages aren’t properly linked, they’re treated as lower priority. Googlebot may crawl them less often or miss them entirely.

- Uncontrolled URL parameters: URLs with tracking or sorting parameters (?utm_source=, ?sort=, etc.) can create hundreds of near-duplicate pages. Without proper canonical tags or other parameter handling, they soak up crawl resources fast.

- Heavy JavaScript reliance: Sites that rely on JavaScript for loading content or navigation can confuse Googlebot. If rendering isn’t handled correctly, links and content injected by JavaScript may never be discovered or indexed.
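Near-duplicate parameter URLs are easier to spot once they’re grouped by a normalized form. The Python sketch below drops parameters assumed not to change page content. These are `utm_*` tracking tags plus a hypothetical `IGNORED_PARAMS` list. It then sorts the remaining parameters and groups URLs that collapse to the same address. Which parameters are safe to ignore depends on your store, so treat the list as a placeholder.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed NOT to change page content on this store.
IGNORED_PARAMS = {"sort", "sessionid", "gclid", "fbclid"}

def normalize(url):
    """Drop ignored/tracking parameters, sort the rest, lowercase the host, drop fragments."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS and not key.startswith("utm_")
    )
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))

def duplicate_clusters(urls):
    """Map each normalized URL to the set of variants that collapse onto it."""
    groups = defaultdict(set)
    for url in urls:
        groups[normalize(url)].add(url)
    return {canonical: variants for canonical, variants in groups.items() if len(variants) > 1}
```

Each cluster is a candidate for two fixes: a canonical tag pointing at the normalized URL, and internal links that use the clean version.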

These technical details don’t just affect visibility—they waste resources. Optimizing them helps increase crawl budget efficiency and keeps Google focused on what really matters: your converting, high-value pages.

How to Optimize Crawl Budget on Large E-Commerce Sites

Fixing crawl budget issues isn’t just about knowing the problem. It’s about taking the right steps to solve it without messing up your store’s structure. Here’s how to optimize crawl budget while keeping SEO intact.

Practical Steps to Improve Crawl Efficiency

These tactics help you get more valuable pages crawled without overwhelming Googlebot:

- Block junk URLs: Use robots.txt to stop crawling cart pages, search results, and dynamic URLs created by filters.

- Consolidate duplicate content: Canonical tags, redirects, and content pruning help reduce waste.

- Prioritize internal linking: Strong internal links to your most valuable pages signal importance to Google.

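- Clean up outdated URLs: Remove dead product pages that no longer serve a purpose. Either return a 404 or 410 status, or 301-redirect them to a close replacement. A noindex tag keeps a page out of the index, but Google still has to crawl the page to see it.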

- Submit updated sitemaps regularly: Help search engines focus on fresh, priority URLs.
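Before shipping robots.txt changes, it helps to test which URLs they actually block. Python’s built-in `urllib.robotparser` only does simple prefix matching. It doesn’t understand the `*` and `$` wildcards Google supports. The sketch below is therefore a small hand-written matcher that follows the matching rules Google documents: the longest matching rule wins, and Allow wins a tie. The robots.txt content is hypothetical, and the sketch assumes a single group that applies to all crawlers. For authoritative checks, Google’s open-source robots.txt parser (the C++ library its production systems use) is the reference implementation.

```python
import re

# Hypothetical robots.txt rules for a store; paths are placeholders.
ROBOTS_TXT = """
User-agent: *
Disallow: /cart
Disallow: /search
Disallow: /*?*color=
Disallow: /*?*sort=
"""

def parse_rules(text):
    """Collect (is_allow, pattern) pairs; assumes one group that applies to all crawlers."""
    rules = []
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        field, _, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if field in ("allow", "disallow") and value:
            rules.append((field == "allow", value))
    return rules

def pattern_to_regex(pattern):
    """'*' matches any run of characters; a trailing '$' anchors the end of the URL."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    regex = ".*".join(re.escape(chunk) for chunk in body.split("*"))
    return re.compile(regex + ("$" if anchored else ""))

def is_allowed(path_and_query, rules):
    """Most specific (longest) matching rule wins; on a tie, Allow wins."""
    best = None  # (pattern length, is_allow)
    for is_allow, pattern in rules:
        if pattern_to_regex(pattern).match(path_and_query):
            candidate = (len(pattern), is_allow)
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]

rules = parse_rules(ROBOTS_TXT)
for path in ["/cart", "/collections/shirts", "/collections/shirts?size=m&color=red"]:
    print(path, is_allowed(path, rules))
```

Keep in mind that robots.txt controls crawling, not indexing. A blocked URL can still appear in results if other pages link to it.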

Even small technical changes can shift crawl budget from low-value pages toward high-converting content, especially for stores with large inventories.

Pro Tip: One insider strategy many overlook—use log file analysis to find high-frequency crawl paths. Often, Google is spending crawl resources on pages you didn’t intend to highlight. Adjust internal links or update robots rules based on that insight.
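Two comparisons cover the Pro Tip above. Start with a list of the paths Googlebot requested (from your logs) and the paths in your sitemap. The first comparison shows which unlisted paths Google crawls most. The second shows which listed pages it never touched. Here is a minimal sketch, assuming both inputs are plain lists of URL paths.

```python
from collections import Counter

def unintended_crawl_paths(crawled_paths, sitemap_paths, top_n=10):
    """Most-crawled paths that are not listed in the sitemap."""
    listed = set(sitemap_paths)
    counts = Counter(path for path in crawled_paths if path not in listed)
    return counts.most_common(top_n)

def never_crawled(crawled_paths, sitemap_paths):
    """Sitemap paths Googlebot did not request during the log window."""
    return sorted(set(sitemap_paths) - set(crawled_paths))
```

Tag, filter, or old promo paths at the top of the first list are candidates for robots.txt rules or fewer internal links. Pages in the second list usually need stronger internal linking.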

Final Thoughts: Start Taking Crawl Budget Seriously

Most e-commerce sites don’t realize how much traffic they lose due to crawl issues. Pages don’t get indexed—not because they’re bad, but because they never got seen. Fixing crawl budget in SEO is one of the most underrated technical wins out there.

When should you worry about crawl budget? Once your site has a large number of crawlable URLs and your indexing starts to lag. Count every filter, parameter, and variant URL, not just products. If your content strategy relies on automation, product feeds, or third-party integrations, crawl waste is almost guaranteed.

Here’s the takeaway:

- Track and monitor crawl behavior via Google Search Console and log files.

- Identify and remove crawl traps, duplicate URLs, and dead content.

- Make it easier for Googlebot to find your best pages, then keep them fresh and internally linked.

Looking for expert guidance? Indexed Zone SEO offers hands-on help with technical SEO and crawl budget optimization tailored to growing brands.

Understanding the crawl budget definition and managing it smartly can be the difference between flatline traffic and consistent growth. For larger stores, this isn’t just a best practice—it’s a competitive edge.

FAQ: Crawl Budget in SEO

How often does Google update a site’s crawl budget?

There’s no fixed schedule. Crawl budget changes based on site health, server performance, and how frequently your content changes. If your site improves in speed, structure, or popularity, Google may revisit more often. But if it detects issues, it may slow down crawling without notice.

Can the crawl budget impact new product pages more than old ones?

Yes, especially if older pages already have backlinks or history. New product pages without internal links or sitemap visibility can easily be skipped if your SEO crawl budget is maxed out. That’s why internal linking and clean architecture are critical for surfacing fresh content.
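One way to give new product pages sitemap visibility is to regenerate the sitemap from the catalog whenever products change. The sitemap should list only indexable URLs, each with an accurate `<lastmod>`. Google has said it ignores `<priority>` and `<changefreq>` but uses `<lastmod>` when it’s consistently accurate. Below is a minimal Python sketch. The `pages` record shape and the `indexable` flag are assumptions; the flag means the URL returns 200, is self-canonical, and isn’t noindexed.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # limit set by the sitemaps protocol

def build_sitemap(pages):
    """pages: dicts with hypothetical keys 'url', 'updated' (a date) and 'indexable'."""
    entries = [p for p in pages if p["indexable"]]
    if len(entries) > MAX_URLS_PER_SITEMAP:
        raise ValueError("split into several sitemaps listed in a sitemap index")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = page["url"]  # ElementTree escapes '&' and friends
        ET.SubElement(url, "lastmod").text = page["updated"].isoformat()
    return ET.tostring(urlset, encoding="unicode")

catalog = [
    {"url": "https://shop.example.com/products/new-shirt", "updated": date(2024, 5, 1), "indexable": True},
    {"url": "https://shop.example.com/products/old-promo", "updated": date(2023, 1, 9), "indexable": False},
]
print(build_sitemap(catalog))
```

A single sitemap file is capped at 50,000 URLs (and 50 MB uncompressed), so larger catalogs need several files tied together by a sitemap index.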

Is the crawl budget the same across all search engines?

Not exactly. Google’s approach is the most documented, but other engines like Bing or Yandex also limit crawling, and their rules differ. One concrete difference: Bing honors the Crawl-delay directive in robots.txt, while Google ignores it. Google also tends to crawl larger, active sites more aggressively, while other engines may be more conservative or slower to re-crawl changes.

Can noindex tags help with crawl budget?

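Only indirectly. A noindex tag tells Google not to index a page, but Google still has to crawl the page to see the tag, so noindex doesn’t stop crawling. To stop crawling, use a robots.txt disallow rule and remove the page from internal links. Don’t combine noindex and disallow on the same URL. If robots.txt blocks a page, Google can’t see its noindex tag, and the URL can stay in the index. If a page needs to drop out of the index first, keep it crawlable until it’s gone, then disallow it.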
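Google can only see a noindex tag on a page it’s allowed to crawl, so URLs that are both noindexed and disallowed in robots.txt are worth flagging. The sketch below is a rough first pass using Python’s built-in `urllib.robotparser`. One caveat: that parser only does prefix matching and ignores Google’s `*` and `$` wildcards. The list of noindexed URLs is assumed to come from a crawler export.

```python
from urllib.robotparser import RobotFileParser

def noindex_conflicts(robots_txt, noindexed_urls, agent="Googlebot"):
    """Noindexed URLs that robots.txt blocks, so Google can't see their noindex tag.

    Note: urllib.robotparser uses prefix matching only (no '*' or '$' wildcards).
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in noindexed_urls if not parser.can_fetch(agent, url)]

# Hypothetical inputs.
robots = "User-agent: *\nDisallow: /cart\nDisallow: /old-promos/\n"
noindexed = [
    "https://shop.example.com/old-promos/spring",
    "https://shop.example.com/tag/sale",
]
print(noindex_conflicts(robots, noindexed))
```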

What’s the best way to monitor crawl budget changes over time?

The most consistent way is tracking the Crawl stats report in Google Search Console, combined with server log analysis. GSC shows overall trends, while logs show exactly which URLs are getting crawled and when. Watching both gives a full picture of how your crawl budget is being spent.
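To watch the log side over time, daily Googlebot hit counts are enough to spot sudden drops. Below is a minimal sketch assuming “combined” format logs. The 50% day-over-day threshold is an arbitrary placeholder, not a Google figure. Month names are parsed with `%b`, which assumes an English (C) locale.

```python
import re
from collections import Counter
from datetime import datetime, timedelta

# Captures the day and the user agent (last quoted field) from a "combined" access-log line.
LINE_PATTERN = re.compile(r'\[(?P<day>\d{2}/\w{3}/\d{4}):[^\]]*\].*"(?P<agent>[^"]*)"$')

def daily_googlebot_hits(lines):
    """Googlebot requests per calendar day, with days that had no hits filled in as 0."""
    counts = Counter()
    for line in lines:
        match = LINE_PATTERN.search(line.rstrip())
        if match and "Googlebot" in match.group("agent"):
            counts[datetime.strptime(match.group("day"), "%d/%b/%Y").date()] += 1
    if not counts:
        return {}
    day, last = min(counts), max(counts)
    filled = {}
    while day <= last:
        filled[day] = counts[day]  # Counter returns 0 for missing days
        day += timedelta(days=1)
    return filled

def flag_drops(daily, threshold=0.5):
    """Days whose count fell below `threshold` times the previous day's count."""
    days = list(daily)
    return [cur for prev, cur in zip(days, days[1:]) if daily[cur] < threshold * daily[prev]]
```

Weekly totals smooth out normal day-to-day noise. A drop that lines up with a deploy, a robots.txt change, or a spike in server errors is the one to investigate first.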