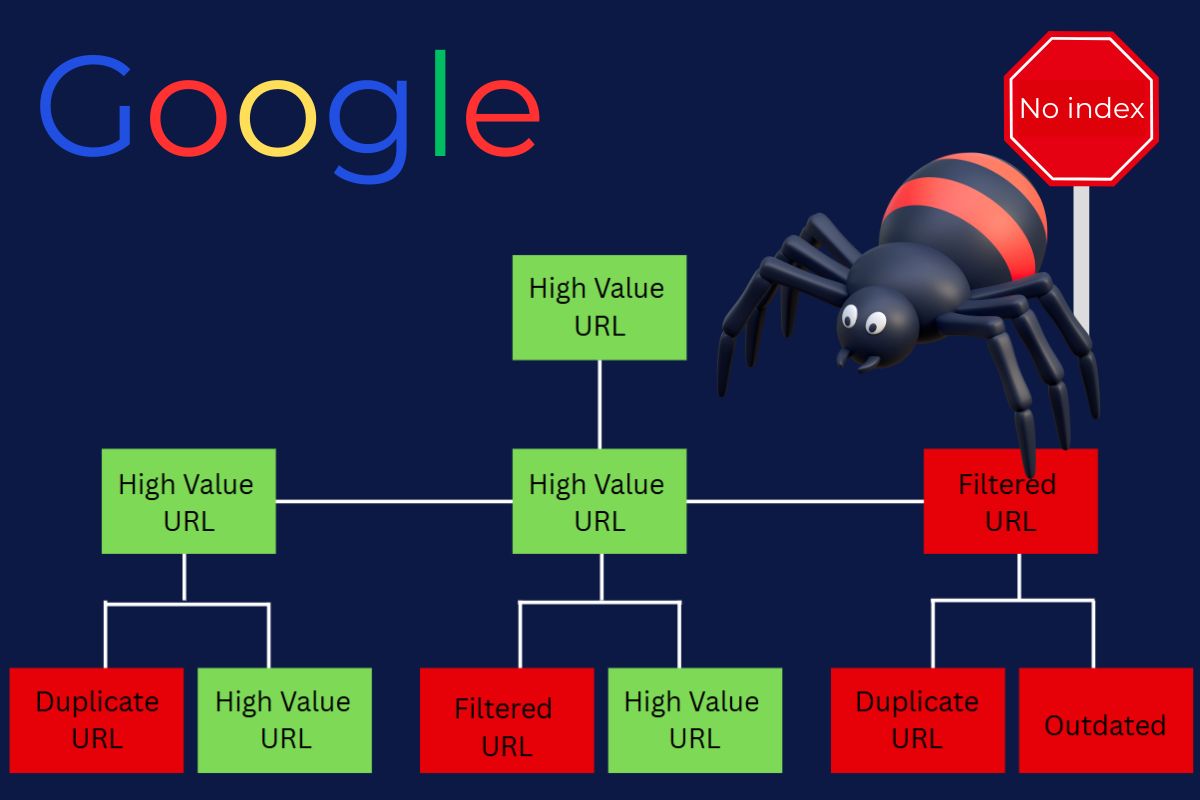

Ever wondered why some of your pages just won’t show up in Google search results? You might be dealing with a crawl budget problem rather than poor content. Search engines like Google crawl only a limited number of your website’s pages in any given period. When that budget gets spent on the wrong pages, your most important content can be left uncrawled and unindexed, which costs you rankings and organic traffic.

What Is Crawl Budget in SEO and Why Does It Matter

Here’s crawl budget in simple terms: it’s the number of URLs that a search engine crawler such as Googlebot is willing and able to crawl on your website during a specific time period. This budget depends on how much crawling your server can handle and how much Google actually wants to explore your content. When you waste it on low-quality pages, your valuable content can stay undiscovered for longer.

Picture your crawl budget like a pizza delivery driver who can only make 20 stops per shift. If they waste time delivering to empty houses or wrong addresses, your hot pizza arrives cold, or doesn’t arrive at all.

Crawl Budget Meaning: The Two Core Components

Google’s own documentation breaks crawl budget down into two essential parts:

- Crawl rate limit – This is the maximum rate at which Googlebot will fetch pages from your site without overloading your server or slowing it down significantly. Google’s current documentation calls this the crawl capacity limit.

- Crawl demand – This represents how eager Google is to crawl your pages based on factors like popularity, how fresh your content is, and the quality of links pointing to those pages.

💡 Real-world scenario: Imagine you’re running an online store with 20,000 different products, but Googlebot only visits 2,000 pages each day. Even if it never fetched the same URL twice, one full pass would take 10 days, so a new product could wait over a week just to be discovered, let alone indexed and ranked.
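To make the arithmetic in that scenario explicit, here is a minimal Python sketch. The function name `days_for_full_pass` and the `wasted_share` parameter are illustrative assumptions, not anything Google exposes, and the 40% waste figure is made up to show how quickly the wait grows.

```python
import math


def days_for_full_pass(total_urls: int, crawls_per_day: int, wasted_share: float = 0.0) -> int:
    """Best-case days for a crawler to fetch every URL once.

    wasted_share is the (assumed) fraction of daily fetches spent on
    duplicate or low-value URLs instead of pages you care about.
    """
    if crawls_per_day <= 0 or not 0.0 <= wasted_share < 1.0:
        raise ValueError("need crawls_per_day > 0 and 0 <= wasted_share < 1")
    useful_per_day = crawls_per_day * (1.0 - wasted_share)
    return math.ceil(total_urls / useful_per_day)


# The scenario above: 20,000 products, 2,000 fetches per day.
print(days_for_full_pass(20_000, 2_000))       # 10
# Same store if 40% of fetches land on filter or duplicate URLs (hypothetical figure).
print(days_for_full_pass(20_000, 2_000, 0.4))  # 17
```

Real crawlers revisit known pages, so actual discovery time is usually longer than this best case.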

The bottom line: Smaller websites with fewer than 500 pages rarely face crawl budget issues. However, if you’re managing a large e-commerce site, an extensive blog, or a SaaS platform with thousands of URLs, understanding how to manage crawl budget becomes critical for your SEO success.

📌 Curious about how crawl budget ties into your overall search visibility? Check out our comprehensive guide on how search engine positioning works.

How to Tell If Crawl Budget Is Holding You Back

You’re probably dealing with crawl budget problems if your new pages take weeks to get indexed, Google Search Console reveals a massive gap between “Discovered” and “Indexed” pages, or your server logs show Googlebot getting distracted by duplicate pages and useless filters.

Warning Signs of Crawl Budget Problems

Here’s what to watch for:

- New pages sitting unindexed for weeks or months

- Google Search Console showing way more discovered URLs than indexed ones

- Server logs revealing Googlebot repeatedly crawling filter pages, tag archives, or expired promotional URLs

- Your crawl statistics report showing sudden drops or wild fluctuations

- High-value pages that should rank well are performing inconsistently or not showing up at all

One fashion retailer discovered that only 65% of their product pages were being indexed. After implementing robots.txt blocks on their faceted navigation filters, indexation rates increased to 92% in just 30 days.

✅ Pro insight: Server log analysis often reveals shocking crawl budget waste. You might discover Google hitting irrelevant pages hundreds of times each month while ignoring your money-making content.
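As a rough illustration of that kind of log analysis, the sketch below counts Googlebot requests per URL. It assumes the common "combined" access-log format, and the sample lines, IP addresses, and paths are invented for the example.

```python
import re
from collections import Counter

# Pattern for the common "combined" access-log format (an assumption;
# adjust it to whatever format your server actually writes).
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per URL for user agents that identify as Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"

# Made-up sample lines for illustration only.
SAMPLE_LOG = [
    f'192.0.2.10 - - [01/Jan/2025:00:00:01 +0000] "GET /shoes?color=red HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [01/Jan/2025:00:00:05 +0000] "GET /shoes?color=red HTTP/1.1" 200 512 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [01/Jan/2025:00:00:09 +0000] "GET /product/blue-sneaker HTTP/1.1" 200 900 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.77 - - [01/Jan/2025:00:00:12 +0000] "GET /product/blue-sneaker HTTP/1.1" 200 900 "-" "{BROWSER_UA}"',
]

# Most-crawled URLs first: filter URLs at the top are a sign of wasted budget.
for path, count in googlebot_hits(SAMPLE_LOG).most_common():
    print(count, path)
```

The user-agent string can be spoofed, so a production version should also verify that requests really come from Google, for example with a reverse DNS lookup.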

Where to Check Crawl Data

To properly analyze your crawl budget, start with Google Search Console’s crawl statistics and coverage reports, then dig deeper with server log analysis. SEO crawling tools can also simulate Google’s behavior to spot potential issues.

Your Main Data Sources

- Google Search Console – Your first stop: the Crawl Stats report, the Page indexing report (formerly called Coverage), and the URL Inspection tool.

- Server logs – These show you exactly which pages Googlebot visits and how frequently (the real goldmine).

- SEO tools like Screaming Frog or Sitebulb – Perfect for running crawl simulations that catch duplicate content and weak internal linking.

📊 Here’s a concrete example: If Google Search Console shows 10,000 URLs discovered but only 5,000 actually indexed, half of the URLs Google knows about aren’t making it into the index. That gap is a strong signal to check whether crawl budget is being spent on the wrong pages.

📌 Want to dive deeper into how these metrics connect to your overall SEO performance? Our guide on SEO metrics breaks it down perfectly.

What Affects Crawl Budget (And What to Fix First)

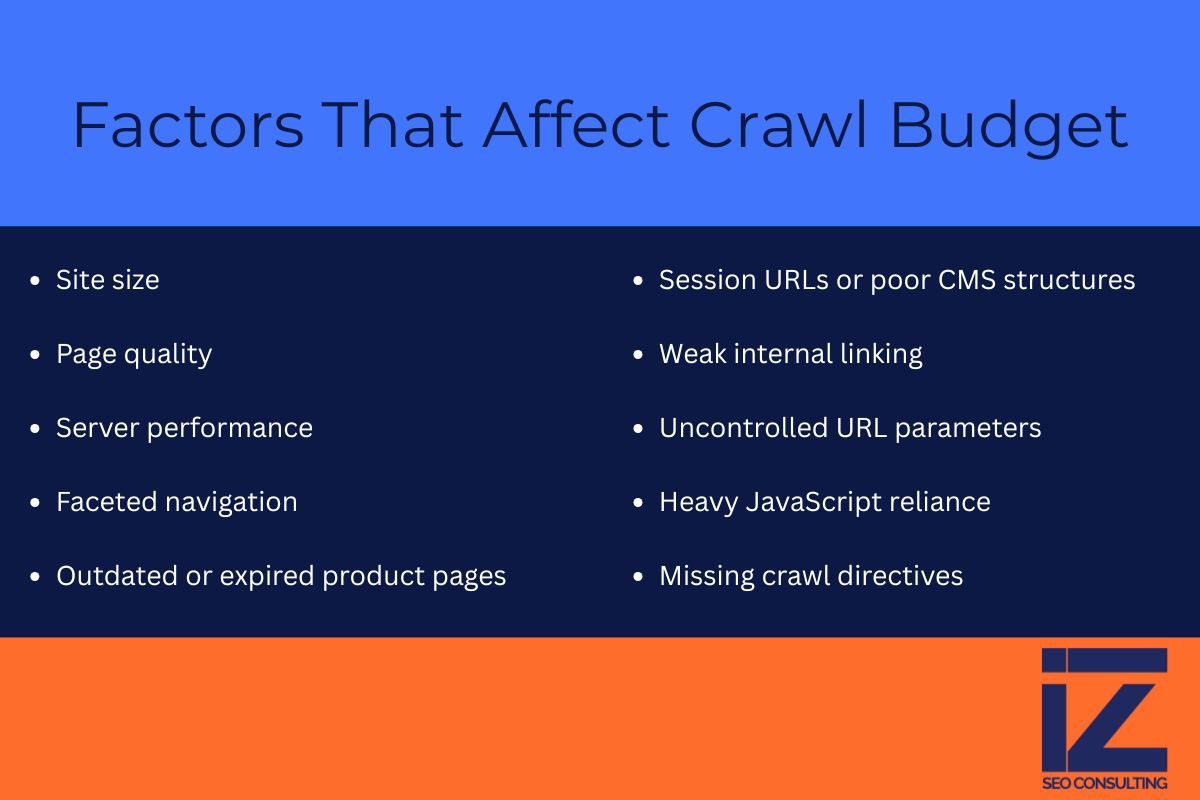

The 3 factors that influence your crawl budget most dramatically are your website’s size, the quality of your pages, and how well your server performs. E-commerce sites face additional challenges with faceted navigation systems and outdated product listings.

The Core Factors Behind Crawl Budget Allocation

- Website size – Larger sites naturally face bigger crawl budget challenges since there’s more content to evaluate.

- Page quality – Thin content, duplicate pages, and low-value URLs drain your budget faster than a leaky bucket.

- Server performance – When your server responds slowly or times out frequently, Googlebot reduces how often it visits.

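📌 Google’s own crawl budget guidance points the same way: it names server health as a limit on crawl rate, and low-value URLs such as faceted navigation, session identifiers, and duplicate content as common sources of wasted crawling.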

Large Site Reality Check:

✅ The upside: Massive product catalogs mean huge search traffic potential.

❌ The downside: Auto-generated URLs can create crawl budget disasters if left unchecked.

Hidden Crawl Budget Killers in E-Commerce

- Faceted navigation – Those handy filter options for color, size, and price can generate 100,000+ near-identical URLs that confuse search engines.

- Zombie product pages – Discontinued items that still live on your site continue eating up crawl budget even though they serve no purpose.

- Session IDs and messy CMS configurations – These create endless duplicate page variations that waste precious crawl resources.
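To see how quickly faceted navigation multiplies URLs, here is a small back-of-the-envelope sketch. The facet names and value counts are hypothetical, and the model is deliberately simple: each facet is either unset or set to exactly one value.

```python
from math import prod


def filter_url_count(values_per_facet: dict) -> int:
    """URL variants of ONE category page when each facet is either unset
    or set to exactly one value (multi-select and sort orders add more)."""
    return prod(count + 1 for count in values_per_facet.values())


# Hypothetical facets for a single shoe category.
FACETS = {"color": 12, "size": 10, "price_band": 8, "brand": 80}

print(filter_url_count(FACETS))  # 104247 (13 * 11 * 9 * 81)
```

Four ordinary filters on a single category page are already enough to pass the 100,000 mark, and the total repeats for every category.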

Additional Crawl Budget Drains

- Weak internal linking structure – Pages with poor internal links get treated as low-priority by Googlebot.

- Unmanaged URL parameters – Things like ?utm=, ?sort=, and ?filter= create countless duplicate versions of the same content.

- JavaScript-heavy pages – Poor rendering can hide your content from crawlers entirely.
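One way to gauge the parameter problem from the list above is to collapse a crawl export or log file to canonical URLs and count how many variants map to each. This is a sketch using only the Python standard library; the list of ignorable parameters is an assumption you would replace after auditing your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed not to change what the page is about (hypothetical list).
IGNORED_PARAMS = {"sort", "filter"}
IGNORED_PREFIXES = ("utm",)  # matches utm, utm_source, utm_medium, ...


def canonical_url(url: str) -> str:
    """Drop ignorable parameters and sort the rest so variants compare equal."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key not in IGNORED_PARAMS and not key.startswith(IGNORED_PREFIXES)
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each canonical URL to the list of crawled variants that collapse into it."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_url(url)].append(url)
    return dict(groups)


CRAWLED = [
    "https://example.com/shoes?utm_source=mail",
    "https://example.com/shoes?sort=price",
    "https://example.com/shoes",
    "https://example.com/shoes?page=2&sort=price",
]
for canonical, variants in group_duplicates(CRAWLED).items():
    print(len(variants), canonical)
```

Groups with many variants are good candidates for canonical tags or robots.txt rules.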

The key insight: Large websites burn through crawl budget incredibly fast. Without actively pruning low-value pages and strengthening your internal linking strategy, crawl budget becomes a major growth bottleneck.
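Internal linking strength is easier to act on when you can measure it. The sketch below computes click depth from the homepage with a breadth-first search and lists pages that no internal link reaches. The link graph is a made-up example; in practice you would build it from a crawl export.

```python
from collections import deque


def click_depths(links: dict, start: str = "/") -> dict:
    """Minimum number of clicks from `start` to every reachable page (BFS)."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site: each page maps to the pages it links to.
LINKS = {
    "/": ["/category/shoes", "/blog"],
    "/category/shoes": ["/product/blue-sneaker"],
    "/blog": ["/blog/crawl-budget"],
    "/blog/crawl-budget": ["/product/blue-sneaker"],
    "/product/old-boot": ["/category/shoes"],  # nothing links here
}

depths = click_depths(LINKS)
orphans = sorted(set(LINKS) - set(depths))
print(depths)
print("Orphan pages:", orphans)
```

Priority pages that sit many clicks deep, or that show up as orphans, are the first places to add internal links.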

How to Optimize Crawl Budget on Large E-Commerce Sites

Want to improve crawl budget efficiency? Focus on blocking worthless URLs, consolidating duplicate content, strengthening internal links to priority pages, removing dead weight, and keeping your sitemaps fresh.

Actionable Steps for Better Crawl Efficiency

- Block worthless URLs using robots.txt – Stop wasting budget on cart pages, search result pages, and admin URLs.

- Consolidate duplicates – Use canonical tags and 301 redirects to point crawlers toward the definitive version of each page.

- Strengthen internal linking to money pages – Make sure your most important pages get the link equity they deserve.

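- Clean house regularly – Remove outdated URLs that no longer serve your business goals (returning a 404 or 410 status), or noindex them if they must stay live. Keep in mind that noindexed pages are still crawled, so removal saves more crawl budget.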

- Keep XML sitemaps current – Submit fresh sitemaps regularly to guide Googlebot toward your priority content.
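The sitemap step is straightforward to automate. Here is a minimal sketch that builds a sitemap from a list of priority URLs using Python’s standard library. The URLs and dates are placeholders, and a real job would pull them from your CMS or product database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries) -> bytes:
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. YYYY-MM-DD
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Placeholder entries for illustration.
PRIORITY_PAGES = [
    ("https://example.com/category/shoes", "2025-01-15"),
    ("https://example.com/product/blue-sneaker", "2025-01-20"),
]

print(build_sitemap(PRIORITY_PAGES).decode("utf-8"))
```

The sitemap protocol caps a single file at 50,000 URLs, so large catalogs need several files plus a sitemap index.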

Real success story: After blocking cart and search pages while removing 5,000 outdated product listings, one SaaS directory reduced crawl waste by 40% and saw an 18% increase in indexed pages within six weeks.
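Blocking rules like the cart and search exclusions mentioned above are worth testing before they go live, since one wrong line can block pages you need indexed. The sketch below checks sample URLs against a draft robots.txt with Python’s built-in `urllib.robotparser`. The rules and URLs are hypothetical. The standard-library parser does plain prefix matching and does not implement Google’s `*` and `$` wildcard extensions, so the example sticks to prefix rules.

```python
from urllib.robotparser import RobotFileParser

# Draft rules (hypothetical paths; adapt to your own URL structure).
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /search
Disallow: /admin
"""


def blocked_urls(robots_txt: str, urls, agent: str = "Googlebot"):
    """Return the URLs that the given robots.txt text disallows for `agent`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


TEST_URLS = [
    "https://example.com/cart/checkout",
    "https://example.com/search?q=red",
    "https://example.com/product/blue-sneaker",
]

print(blocked_urls(ROBOTS_TXT, TEST_URLS))
```

Running a list of your money pages through a check like this is a cheap way to confirm none of them are blocked by accident.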

FAQ: Crawl Budget in SEO

How often does Google update the crawl budget?

There’s no fixed schedule. It depends on your server health, how popular your site is, and how frequently you update content.

Does crawl budget affect new vs. old pages differently?

Absolutely. Established pages with quality backlinks get crawled more frequently. New pages need strong internal links and sitemap inclusion to get noticed.

Is crawl budget the same across all search engines?

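Not at all. Each search engine runs its own crawler with its own limits and priorities. Google often crawls more aggressively, while Bing and Yandex are typically more conservative, but the pattern varies from site to site.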

Do noindex tags help with crawl budget?

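Only indirectly. Googlebot has to crawl a page to see its noindex tag, so the crawl still happens. Use robots.txt disallow directives for true crawl budget savings, and don’t combine the two on the same URL: a crawler that is blocked from a page never sees its noindex tag.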

What’s the best way to monitor crawl budget?

Combine Google Search Console crawl stats with server log analysis. Together, they show whether your optimization efforts are actually working.

Final Thoughts: Start Taking Crawl Budget Seriously

You should start worrying about crawl budget when your site grows beyond a few hundred pages and you notice indexing delays. For e-commerce businesses, automated systems and product feeds almost guarantee crawl budget waste without proper management.

When Should You Worry About Crawl Budget?

- Once your website exceeds several hundred URLs

- When Google Search Console shows consistent indexing delays

- If new products or blog posts aren’t appearing in search results quickly enough

The reality is this: Managing your SEO crawl budget effectively can be the difference between stagnant organic growth and explosive scaling.

Ready to Take Action? Don’t let inefficient crawl budget management hold your website back. Crawl budget optimization isn’t just a technical exercise; it’s a business growth strategy. Partner with Indexed Zone SEO for a customized approach that delivers measurable improvements.